Mental Compression of Space and Time

I have a friend who loves walls of text and weird ideas, whom I've been bouncing wild theories and ideas off for several years now. A gem, to be sure. He holds me to a certain level of intellectual honesty by pointing out when, whether correct or not, I've veered into more baseless spit-balling, and will relent only if and when I provide adequate source material or other reasonable justification for a given assertion.

This keeps me at least hovering around "informed but not scientifically rigorous", which is good enough for someone not involved in any actual scientific publishing who just wants to share some hopefully novel or inspiring ideas.

We tend to share a lot of ways of thinking about things and come to many similar conclusions (though certainly not always), but we eventually found that we often got hung up on odd misunderstandings even when we generally agreed on something. This itself isn't really odd or notable, but over time, and many hours of hashing things out to figure out where the glitch was, we realized that the way we misunderstood each other followed an apparently consistent, if not universal, underlying pattern.

The particular difference in the way we see things is somewhat difficult to describe, but essentially his cognition works more in nouns, and mine in verbs. He's horizontal and I'm vertical. He thinks in terms of things in space, while I see systems and processes moving in time.

This may also give some insight into the odd difficulty math people and programmers have understanding each other, despite the foundational overlap in their crafts. Fittingly, my friend has historically been more of a math person, while I'm a seasoned tech nerd and programmer by trade.

This can be illustrated a bit better by thinking about the differences between mathematical equations and code. You might visualize a whiteboard full of Greek letters and scrawlings, or even nice graphs of functions, but ultimately they're static definitions. Even functions, despite their implied verb-like nature, symbolize a full set of results defined by the formula, yet only the formula itself, the symbol of the process, is presented. They're not really bound by time (even when time is one of their variables) but sit in spatial terms, limits included.

Code, on the other hand, is also a series of glyphs, but these glyphs are written to define an explicit process. Despite the variables present, for any given inputs there is only one execution path through the code as it "moves", versus the many or infinite outcomes implied by a symbolic math formula. Code largely ignores space, which doesn't really make sense in terms of execution (RAM or database usage aside, which is more of a side effect), and instead uses time, meaning movement and change, through execution. Running code is perhaps like pushing a math formula through a narrow maze to find one particular path to the end, rather than defining the maze itself, as an equation would.
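As a rough sketch of that distinction (a hypothetical example, not taken from any real codebase), here is the absolute-value function written both ways. The comment holds the piecewise math definition, where both cases sit on the page at once; the Python function `abs_traced` (a made-up name) records which branch actually runs, so a call with `-3` walks only one path through the "maze":

```python
# Hypothetical illustration: one piecewise definition, one traced run.
# In math notation, the whole definition is present at once:
#   |x| = -x   if x < 0
#   |x| =  x   otherwise
# As code, only one branch executes for any given input.

def abs_traced(x: float, trace: list[str]) -> float:
    """Return |x|, appending the name of the branch that actually ran to `trace`."""
    if x < 0:
        trace.append("negative branch")
        return -x
    trace.append("non-negative branch")
    return x


if __name__ == "__main__":
    trace: list[str] = []
    # The definition has two paths; this particular run takes exactly one.
    print(abs_traced(-3, trace), trace)  # prints: 3 ['negative branch']
```

The formula describes every input/output pair at once; the run only ever sees the single pair it was handed.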

Switching gears, I ran into similar misunderstandings, or at least the realization of very different and apparently opposite modes of perception and cognition, with my partner. When we watched movies, he would constantly comment on aspects of production, fiddle with the sound equalizer, or point out very subtle nuances in framing, lighting, or other aesthetic aspects of the film, but he often forgot to notice the plot, or got bored during long stretches of dialog where the scene was otherwise largely unchanging.

For my part, I can switch focus and see the qualia (if not as well or as automatically as he does), but I don't typically think to look; it's a strictly intentional act. It all sort of blends into a vibe, which is taken in and gives subtle feelings, like foreboding music while something creepy is happening, but I let it wash over me. I let myself be immersed in it, losing myself in the story rather than looking at it. At some point I realized this was, more or less, the same distinction as with my math friend. My partner sees the nouns, while I see the verbs. He sees the encoding that defines the aesthetic, while I lose myself in it to fully experience it.

With my curiosity now piqued, I dove into trying to understand this further, and realized it likely tied into my left/right brain observations, at least in part. Still, I wanted to see how it worked in more detail, and perhaps how each of us could better nurture the opposite mode if desired. I bombarded both of them, along with a few other hapless test subjects, with a selection of images, videos, and other media samples, through which I attempted to isolate various distinct sensory effects and note where the overlaps and anomalies in reported perception occurred.

If you've read my other article, or are otherwise familiar with the idea, you'll probably guess that the more right-brained someone presents as (at least in the model I'm using), the more noun-like their perception and cognition, and the more they focus on aesthetics and qualia. The more left-brained someone presents, the more they lose the forest and see the trees: the moving interactions, plot shifts, and dialog. The more interesting bit, however, is that the other half more or less disappears, regardless of whether you're a forest person or a trees person. The senses just "glide over it", or it fades into the background environment.

How well you can see both depends on various factors, though being able to tune into both seems to be the optimal mode, or at least being able to shift between them easily, zooming in and out mentally. Programmers who work on larger and more complex applications have to do this zooming as a matter of course, as does anyone in a profession that involves both detailed, logical, well-ordered aspects (code, syntax, logic) and big-picture aspects with webs of interaction (side effects, libraries, the systems involved). Some professions are more biased toward one or the other, and perhaps some don't require much of either.

Another friend, who studies meta-cognition extensively, speaks at length about the idea of "information compression", which is a form of abstraction. While it's very difficult (or impossible) to manually play every sound in a song in your head individually, it's very simple to hear the song as a single thread of execution, playing all at once (for those not lacking internal audio).

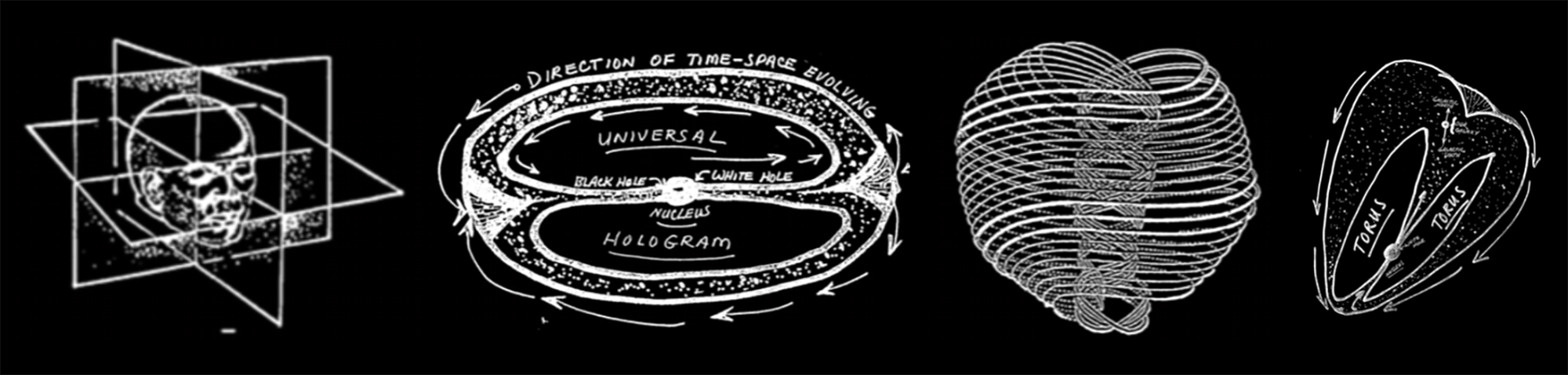

When we speak of a planet, we do not define every grain of sand or drop of ocean water, nor every physical force such as gravity and magnetic fields, though all of these things and more are implicitly bundled into the full definition of a planet. We call this "implication space", and the more we pack into a higher-level abstraction, the more we can move around with ease, since each concept is always one mental unit, no matter how much "compressed information" is hiding in its implication space.

It eventually hit me that the invisibility of either the spatial/noun-like or the temporal/verb-like qualities of perception was probably an automatic form of compression. Whichever half we aren't attending to leaves our conscious processing and hides in implication space, still present and with some (subconscious) influence, like a subliminal message, but not actively perceived. In this way, two people can look at or discuss a given thing and both fail to see half the picture, or even fail to see that they're describing something very similar, let alone the same thing. (One may choose to correlate this with another potentially false dichotomy involving a different "left" and "right" bias, but I digress.)

All of this is abstractly reminiscent of Heisenberg's uncertainty principle, which states that the position (space) and the momentum (motion, and thus time) of an object cannot both be known exactly at the same time. The principle is closely tied to the wave-particle duality of matter, which has a similar split: waves are functions, or change over time, while particles have the particular quality of taking up space, and are what moves through space over time.
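For reference, the standard textbook (Kennard) form of the principle bounds the product of the two spreads, where $\sigma_x$ and $\sigma_p$ are the standard deviations of position and momentum and $\hbar$ is the reduced Planck constant:

$$
\sigma_x \, \sigma_p \ \ge\ \frac{\hbar}{2}
$$

Narrowing one spread forces the other to widen, which is the trade-off the analogy leans on.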

Now, perhaps we can zoom around, and even see both halves at once to some extent, depending on one's mental dexterity and training, but it seems consistent with Heisenberg that the more we zoom in one direction, the more we lose of the other. So perhaps this is just the way our minds adhere to one of the most fundamental principles in nature? At an intuitive level, part of the tension is that locating something and measuring its motion are different kinds of acts: location involves triangulation of some sort, while velocity is the rate at which something moves relative to another object or reference frame. This is obviously a vastly simplified picture of the relativity and physics involved, though; the quantum limit itself is a fundamental property of nature, not merely a measurement difficulty.

We see this in graphs, too: we usually have to choose between showing how data moves over time (e.g., stock value changes) and showing many data points at a single point in time (e.g., pie charts), because extra axes quickly get out of hand and lead to confusion. We can even make the same data suggest different, sometimes wildly different, things depending on how we model and present it and which axes we select.
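To make the axis choice concrete, here is a minimal Python sketch using made-up prices for two hypothetical tickers, `AAA` and `BBB`. The names, numbers, and the `time_series`/`snapshot` helpers are all illustrative assumptions. The same table yields a "verb" view (one item followed through time, as in a stock chart) or a "noun" view (every item frozen at one moment, as in a pie or bar chart), depending on which axis you hold fixed:

```python
# Hypothetical toy data: closing prices for two made-up tickers over three days.
prices: dict[str, list[float]] = {
    "AAA": [10.0, 11.0, 12.5],
    "BBB": [20.0, 19.5, 21.0],
}


def time_series(data: dict[str, list[float]], ticker: str) -> list[float]:
    """'Verb' view: hold the item fixed and follow it across every day."""
    return list(data[ticker])


def snapshot(data: dict[str, list[float]], day: int) -> dict[str, float]:
    """'Noun' view: hold the day fixed and look at every item at once."""
    return {ticker: series[day] for ticker, series in data.items()}


if __name__ == "__main__":
    print(time_series(prices, "AAA"))  # prints: [10.0, 11.0, 12.5]
    print(snapshot(prices, 1))         # prints: {'AAA': 11.0, 'BBB': 19.5}
```

Showing both views in a single chart means adding another axis (or a grid of small charts), which is usually where the confusion starts to creep in.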

As a fun exercise, or if you doubt the veracity of these mental space-time theories, give it a shot yourself. Focus as much as you can on the aesthetics of a movie: every camera angle, the slight echo in the soundtrack, the way the camera keeps shifting to hint at something in the background of the scene. Then see if you can still follow the plot when there's dialog or other left-brained interaction happening at the same time.

Then flip it around. Focus intently on discerning whether the dialog carries multi-layered meaning, or gives cues through subtle shifts of tone or facial expression as two characters interact (or as a character interacts with something in their environment), and see whether the aesthetics don't just magically disappear when you're not looking at them. The key on the time, or plot, side is to watch interactions: the verbs, what things are doing, not their presentation or characteristics. On the aesthetic side, it's the reverse.

Observe which mode of perception you're more naturally drawn to and attempt to process the other very thoroughly.

Try to play a song in your head by playing each note or staff separately, rather than as coherent, pre-recorded-style playback. Left-brained processing seems far more constrained to one dimension, aptly enough relating to the single dimension of time. Try to sum three numbers simultaneously, without first adding two and then adding the third in linear fashion, and the orderly left-brained logic can't do it. Visualize several shapes and move them all at once: this may be challenging, but it's possible, and it's a more right-brained (unordered, symbolic) function.

It won't always be 100% this or that, but there are apparent boundaries, and some mental manipulations are far more challenging, even exhausting, to try. Try to find new methods and structures for testing how you can mentally model information and move it around: which arrangements feel difficult to hold and/or keep track of, which disappear, and what other glitches or tricks show up. Find ways I'm wrong and tell me about them!

I've found a handful of other axes where we can observe this mental space vs. time, particle vs. wave, left vs. right, or forest vs. trees effect, whether subtly or as obviously as with me and my cohorts, but I'm sure they're essentially endless once noticed. I'd suggest going into forest mode to some extent during interactions to look for them, and let me know if you find any cool examples, eh?
